The Real ROI of AI

Are You Measuring AI's Impact All Wrong?

As a founder, I spend my days speaking with engineering managers and leaders. "How do we measure the ROI of AI?" has been a recurring question lately.

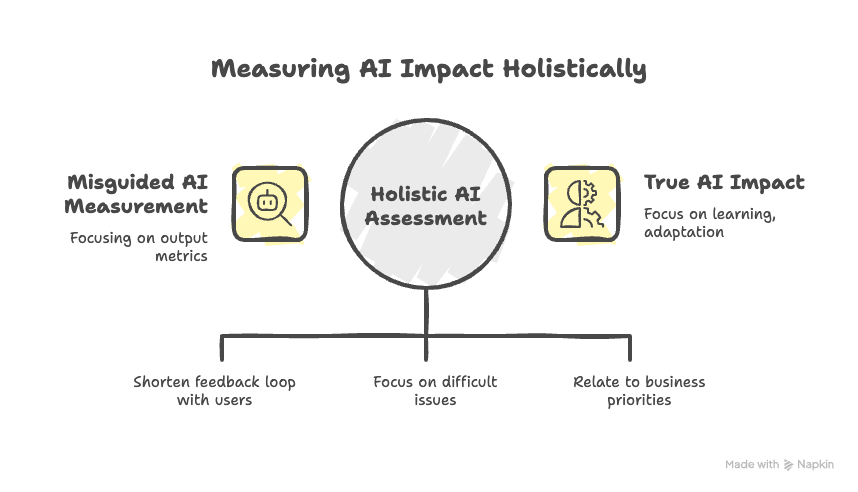

The pressure from leadership is understandable given that coding assistants and other AI tools cost $10 to $20 per developer per month. They would like to see a return on a new line item that has been added to the budget. Lines of code generated, completion acceptance rates, or a nebulous notion of "individual developer productivity" appear to be the preferred metrics.

I'm a product person at heart, so this seems a little strange. We seem to be measuring output rather than outcomes. It brings to mind the early days of the internet, but with one significant, costly difference.

Reminiscent of the GeoCities Era

Remember the late 1990s? The internet was a wild, uncharted territory, and the economics were entirely different. The web itself was free, the only tool you needed was a basic text editor, and learning HTML was something you did at your own pace.

No one asked about the return on investment of learning how to write a tag or use a keyboard. The only cost was the time spent experimenting: modifying code and playing with design. The early web companies cared more about the business outcomes that HTML enabled than about the return on investment of a particular technology. Could you create an online bookshop? A community forum? An auction site? The technology was a means to a much bigger, more exciting end.

Instead of optimizing an existing procedure, the emphasis was on discovery and creating new things.

The $20/Month Question

Let's fast-forward to today. It's a different landscape. AI is not free. It comes with a monthly subscription fee, and that cost needs to be justified. So we fall back on output metrics, which are simple to measure.

However, what if the true benefit isn't a 10% speedup in boilerplate code writing for engineers? Our primary concern as we develop EvolveDev.io is that leaders lack the visibility to see the big picture, data is siloed, and engineering teams are overworked.

Adding an "AI productivity" metric on top of that doesn't address the root cause. It merely adds another number to track in a spreadsheet. Your engineering team's story points may not even touch on the true effects of AI.

The Hidden Value of AI: The Work That Never Happens

Forget lines of code.

AI truly makes an impact when it enables your team to learn more quickly. A PM who uses a quick prototype to disprove a bad idea can save weeks of engineering work.

In that example, AI didn't write any production code. Neither your deployment frequency nor your cycle time improved as a result.

What it accomplished, though, was perhaps more valuable: it saved dozens of hours of focused engineering time. The PM rejected the idea before it was ever added to the backlog.

This is the hidden ROI. It's the work that never gets done because AI made it possible for someone else to learn and move more quickly. Your developers can now concentrate on the things that matter.
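To make that concrete, here is a rough back-of-the-envelope sketch in Python. Every input is a hypothetical assumption chosen for illustration (a 10-person team, the $20 seat price from the top of that range, 36 avoided hours, a $100 loaded hourly cost), and the function names are mine, not part of any tool. It compares what one avoided piece of work might be worth against the monthly subscription bill.

```python
def monthly_tool_cost(developers: int, price_per_seat: float) -> float:
    """Total monthly subscription cost for the team."""
    return developers * price_per_seat


def avoided_work_value(hours_avoided: float, hourly_cost: float) -> float:
    """Value of engineering time that never had to be spent."""
    return hours_avoided * hourly_cost


# Hypothetical inputs: adjust these to your own organization.
tool_cost = monthly_tool_cost(developers=10, price_per_seat=20.0)
saved = avoided_work_value(hours_avoided=36, hourly_cost=100.0)

# How many months of subscriptions one avoided project would pay for.
months_covered = saved / tool_cost

print(f"Monthly tool cost: ${tool_cost:,.0f}")
print(f"Value of avoided work: ${saved:,.0f}")
print(f"Months of subscriptions covered: {months_covered:.1f}")
```

Under these made-up numbers, a single rejected idea would cover well over a year of seats. The hard part is not the arithmetic but noticing the avoided work at all, since it never appears in a commit log or a sprint report.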

The real payoff is the expensive, wasteful labor that AI completely eliminates.

We are not in the optimization phase yet; we are in the discovery phase.

This leads me to my central conviction on the subject. Trying to show the immediate, line-item ROI of AI tools today is like trying to justify the ROI of that first HTML textbook in 1998. For the stage we're in, it's the wrong question.

We should concentrate on increasing our organization's ability to learn and adapt rather than pursuing micro-optimizations. The best way to assess AI tools is to look at how they help your entire product development organization learn, not just what they help your engineers write.

Ask these questions instead:

How much quicker can we validate novel concepts?

Is the feedback loop between an idea and actual user input being shortened?

Are we freeing our senior engineers from interruptions so they can concentrate on the harder problems?

As we develop EvolveDev.io, I have become well acquainted with the challenge of measuring this holistically. If your team communicates in Slack, your code is in GitHub, and your project data is in Jira, you won't be able to see these cross-functional benefits. You need to see how organizational effort maps to business priorities and how new tools are changing the way that effort is allocated.

So let's stop using a stopwatch to measure AI. Let's begin gauging it by the new opportunities it opens up.

See the Full Picture, Not Just the Pixels

We understand the pressure engineering leaders face to justify new tools and prove ROI. But when the true impact of AI—like the costly work it helps you avoid—is invisible across scattered data silos, you're forced to rely on vanity metrics. EvolveDev.io is built to connect these dots, giving you the holistic visibility to see how new technologies are fundamentally changing your team's capacity to innovate.

It's time to leverage an engineering intelligence platform that provides the clarity to measure what truly matters, shifting the focus from simple outputs to transformative outcomes.